Imagine that every star in the sky is a memory. We can focus on only one particular area at a time, from a vague, slightly fuzzy image at the far reaches of our visual field, to the star directly in front of us. There are other stars, but we’ll have to move our eyes or turn our head to bring them into focus. In other words, we shift our attention from one star to another and the ones surrounding it fade into the background. Our memories, therefore, flit in and out of awareness, depending on where we focus our attention.

Comparing memories to stars might seem unusual, especially if you’re used to seeing memory as a series of boxes and arrows, but this is a very simple demonstration of what Nelson Cowan calls activated long-term memory. Cowan’s Embedded Processes Model re-conceptualises working memory as the active portion of long-term memory, or the memory that is currently the focus of attention. Attention, therefore, is crucial to working memory and, thus, learning.

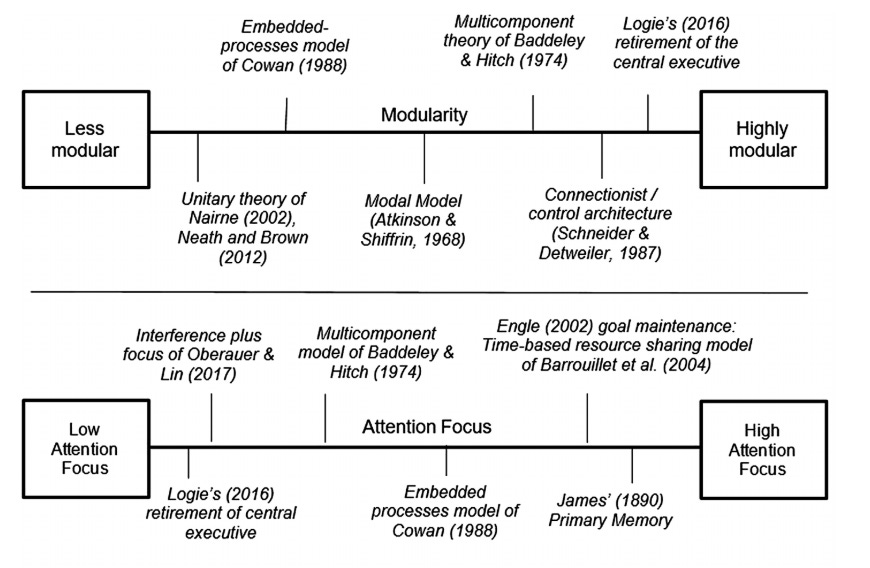

This approach to memory and learning falls towards the unitary end of memory models rather than the modular or multi-component end, such as the model proposed by Atkinson and Shiffrin. There are other models, ranging from unitary to fully modular, with differing emphasis on, say, attention (see the diagram below for a very brief summary).

The history of psychology is the history of learning

In #16 I implied that our knowledge of memory systems can be viewed as a type of metacognition, but only if we apply this knowledge to how we, as individuals, learn new things. Sometimes repetition is the best method, such as learning guitar chords and developing new positive habits. But other types of learning require deeper processing and, consequently, the creation of knowledge structures that can be used in a variety of novel situations (see Craik and Lockhart). To fully appreciate this, we can benefit from understanding how the study of memory has evolved over time.

There’s a tendency to view memory research through the lens of the so-called cognitive revolution. That said, many (e.g. Miller, Baddeley) have argued that it wasn’t a revolution at all, but a counter-revolution, a return to the work carried out by the early experimental psychologists of the late nineteenth century.

While research into memory certainly accelerated during this time, the likes of Aristotle, Plato, Cicero, Augustine and Locke had much to say about memory, and it was in Ancient Rome that the ‘art of memory’ evolved. During the Mediaeval period this art of memory remained highly valued and the ability to display advanced memory ability was seen as integral to the ‘merit and worth’ of a person.

A good memory was associated with a person’s ability to construct logical arguments, to understand scripture and laws and know how to conduct oneself. But there was also a growing interest in the details of memory and forgetting and how and why something learned today could be forgotten by tomorrow.

In his lecture to the Royal Society of London in 1682, the English polymath Robert Hooke introduced an early model of memory supporting the notion that newly learned information decays over time if not refreshed. But it would be the German experimental psychologist, Hermann Ebbinghaus, who would empirically test this phenomenon some two hundred years later.

I’ve been critical of Ebbinghaus (and for good reason) but my lowly criticism doesn’t reduce the significance of his work. To truly appreciate it, we need to compare him to the grandaddy of them all, Wilhelm Wundt.

Wundt established the world’s first experimental psychology laboratory at the University of Leipzig in 1879. His lab provided all that was necessary at the time, including a series of well-equipped rooms and a steady supply of doctoral students.

Ebbinghaus’ early career was very different to that of Wundt. After obtaining his doctorate from the University of Bonn in 1873, he would cultivate his interest in human memory, an area for which he is best known. In 1880 he obtained an unsalaried position at the University of Berlin where he was able to continue his research.

Rather than a purpose-built laboratory and an army of eager young students, Ebbinghaus worked alone, attempting to counter the prevailing view of the time that higher mental functions such as memory couldn’t be studied scientifically. This was clearly going to be a difficult task, not least because an experimental psychologist needs volunteers, and Ebbinghaus had none. He, therefore, opted to use the only person available - himself, a decision that would cause ripples of criticism throughout time, but one he had little option but to take.

Perhaps just as important as his findings is the fact that his studies took into account elements such as bias and potentially confounding variables.

For example, Ebbinghaus tested himself at the same time every day and made sure that his immediate environment was also kept constant, even to the extent of remaining mindful of not varying his daily activities in the hour or so before the tests. He kept the time constant by using a metronome, thus ensuring that he spent exactly the same time on each syllable, and said each syllable with the same voice inflection.

However, it’s been argued that Ebbinghaus actually set memory research back, as early experimental psychologists focussed much of their attention on the learning of lists and nonsense words (generally referred to as verbal learning), to the detriment of other (perhaps more useful) endeavours.

Experimental psychology continued to flourish in German universities up until the 1930s, when psychology became weaponised as a means to support the view of German racial superiority. Not surprisingly, many psychologists in Germany decided it was a good time to leave and head to the United States. Unfortunately, by then America had embraced behaviourism and the German memory researchers found themselves in lowly academic positions in universities primarily in the southern plains states, leading to their work on verbal learning being labelled dustbowl empiricism.

In the UK, behaviourism never took hold in the same way it did in the United States, although it remained very influential. Bartlett had managed to resist the lure of behaviourism despite much of his most influential work taking place during the 1930s, while others (including Miller in the United States) would try and fail in their pretence to be seen as behaviourists.

The cognitive (counter) revolution

These early accounts of memory are certainly enlightening, yet it was the advent of cognitive psychology in the 1950s that saw the greatest advancement in our understanding of how we are able to retain the vast amounts of information we are exposed to every second of the day. But even some of the seminal studies of the 1950s weren’t new, and neither were their findings.

For example, the amount of information held in short-term memory had been established by both Joseph Jacobs and Francis Galton in the nineteenth century, and both Galton and William James had already hypothesised memory as consisting of long- and short-term varieties (although they didn’t necessarily use these terms).

Atkinson and Shiffrin would examine evidence from the emerging discipline of neuroscience, as well as experimental psychology, to create their multi-store, or modal, model of memory in 1968. Baddeley and Hitch would then adapt the short-term memory component of the modal model so that it better explained experimental findings, culminating in the Working Memory model. Along the way, Ericsson would describe the development of expertise via a model of long-term working memory, Cowan did away with boxes and arrows and placed greater emphasis on attentional processes, while Barrouillet attempted to get to grips with the switching between attention and processing in his Time-based Resource Sharing model. Sweller and others working within a Cognitive Load Theory paradigm tried to explain working memory limitations by referring to most of these models at one time or another.

Can models make us smarter?

The strength of a model can be gauged by its usefulness. This is perhaps why Cognitive Load Theory has become so popular: not because it’s necessarily right, but because it’s useful. It takes established concepts and places them within a useable framework. However, there is, as yet, very little research on applying CLT to self-directed learning, being as it is a theory of instructional design and not a theory of cognitive load.

If I want to learn a new language, I also want to know how best to go about it (the metacognition part). Strict numerical limits of, say, working memory may or may not be useful, because much depends on the type of information or the skill to be learned. If I can group items together and associate them with what I already know (commonly referred to as chunking), I can increase this capacity. In the real world, we’re also less likely to be asked to learn a list of random digits or nonsense words. Furthermore, we tend to chunk instinctively, at least to some extent.

But working memory isn’t just about how many chunks we can keep in place at any one time - it’s also about how limited resources are distributed across the task. Resources will be shared differently depending on your individual goals, and limits may differ because some items are interfering with others.

Models of learning and instruction that take these aspects into account (such as CLT) are perhaps our best bet for now, but even these theories can’t explain all the contradictions that our memories throw up. The road to where we now stand was long and winding, and there are still many miles to go.

Twitter: marcxsmith